The non-Hermitian Lanczos method as presented in

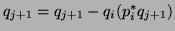

Algorithm 7.13 below is a two-sided iterative algorithm

with starting vectors ![]() and

and ![]() .

It can be viewed as biorthogonalizing, via a two-sided Gram-Schmidt

procedure, the two Krylov sequences

.

It can be viewed as biorthogonalizing, via a two-sided Gram-Schmidt

procedure, the two Krylov sequences

![\begin{displaymath}

T_j=\left[

\begin{array}{ccccc}

\alpha_1 & \gamma_2 &&&\\

\...

...ts&\gamma_{j}\\

&&&\beta_{j}&\alpha_j\\

\end{array} \right].

\end{displaymath}](img2176.png)

To each Ritz value

![]() there correspond right

and left Ritz vectors,

there correspond right

and left Ritz vectors,

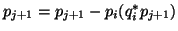

We will now comment on certain steps of Algorithm 7.13:

In practice, an exact null vector is rare. It does happen that

the norms of ![]() and/or

and/or ![]() are tiny. A tolerance value to

detect a tiny

are tiny. A tolerance value to

detect a tiny ![]() compared to

compared to

![]() or

a tiny

or

a tiny ![]() compared to

compared to

![]() should be given. A default

tolerance value is a small multiple of

should be given. A default

tolerance value is a small multiple of ![]() , the machine precision.

, the machine precision.

In practice, exact breakdown is rare.

Near breakdowns occur more often; i.e., ![]() is nonzero but

extremely small in absolute value. Near breakdowns cause stagnation

and instability.

Any criterion for detecting a near breakdown either must

stop too early in some situations or stops too late in other cases.

A reasonable compromise criterion for detecting near breakdowns

in an eigenvalue problem

is to stop if

is nonzero but

extremely small in absolute value. Near breakdowns cause stagnation

and instability.

Any criterion for detecting a near breakdown either must

stop too early in some situations or stops too late in other cases.

A reasonable compromise criterion for detecting near breakdowns

in an eigenvalue problem

is to stop if

![]() .

.

No stable algorithm has been found for an eigenvalue problem that

approximates the eigenvalues of a general tridiagonal in ![]() floating point operations, though

recently conditionally stable algorithms such as [94,189]

have been proposed. No software implementation of the Lanczos

algorithm known to the authors employs a fast conditionally stable eigensolver.

floating point operations, though

recently conditionally stable algorithms such as [94,189]

have been proposed. No software implementation of the Lanczos

algorithm known to the authors employs a fast conditionally stable eigensolver.

If there is no re-biorthogonalization (see [105]),

then in finite precision arithmetic

after a Ritz value converges to an eigenvalue of

![]() , copies of this Ritz value will appear at later Lanczos steps.

For example, a cluster of Ritz values of the reduced

tridiagonal matrix,

, copies of this Ritz value will appear at later Lanczos steps.

For example, a cluster of Ritz values of the reduced

tridiagonal matrix, ![]() , may approximate a single eigenvalue of the original

matrix

, may approximate a single eigenvalue of the original

matrix ![]() .

A spurious value [93]

is a simple Ritz value that is also an eigenvalue of the

matrix of order

.

A spurious value [93]

is a simple Ritz value that is also an eigenvalue of the

matrix of order ![]() obtained by deleting the first row and column

from

obtained by deleting the first row and column

from ![]() .

Such spurious values should be discarded from consideration.

Eigenvalues of

.

Such spurious values should be discarded from consideration.

Eigenvalues of ![]() which are not spurious are identified

as approximations to eigenvalues of the original matrix

which are not spurious are identified

as approximations to eigenvalues of the original matrix ![]() and

are tested for convergence.

and

are tested for convergence.

for

end for

Applying the TSMGS process at each step is very costly and becomes a computational bottleneck. In [105], an efficient alternative scheme is proposed. This topic is revisited in §7.9.