In this section, we combine the ideas of the inexact Cayley transformation, the (Jacobi) Davidson method, and the rational Krylov method. Roughly speaking, we still have the same method as the inexact Cayley transform Arnoldi method or the preconditioned Lanczos method, with the only difference that the zero ![]() and the pole ![]() may be updated on each iteration. As with the preconditioned Lanczos method, the Ritz vectors are computed from the Hessenberg matrices. In addition, the Ritz values are also computed from the recurrence relation.
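
To make the role of the zero and the pole concrete, the following is a minimal sketch of applying a Cayley transform, once with a direct solve and once inexactly. It assumes the standard form of the transform, `(A - pole*B)^{-1} (A - zero*B)`. The function name `cayley_apply`, the use of a few Jacobi sweeps as the inexact solver, and the small test matrices are illustrative assumptions, not part of the algorithm discussed in this section.

```python
import numpy as np

def cayley_apply(A, B, pole, zero, v, n_inner=None):
    """Return w ~ (A - pole*B)^{-1} (A - zero*B) v.

    n_inner=None uses a direct solve (exact transform).
    Otherwise n_inner Jacobi sweeps are used (inexact transform);
    Jacobi is only a stand-in for whatever iterative solver is used.
    """
    M = A - pole * B
    rhs = (A - zero * B) @ v
    if n_inner is None:
        return np.linalg.solve(M, rhs)
    d = np.diag(M)
    w = np.zeros_like(rhs)
    for _ in range(n_inner):
        w = w + (rhs - M @ w) / d  # one Jacobi sweep
    return w

# Hypothetical small symmetric problem with B = I.
A = np.diag([1.0, 2.0, 3.0, 10.0]) + 0.01 * np.ones((4, 4))
B = np.eye(4)
lam, X = np.linalg.eigh(A)

pole, zero = 0.5, 5.0
x0 = X[:, 0]  # eigenvector for the eigenvalue closest to the pole

w_exact = cayley_apply(A, B, pole, zero, x0)
w_inexact = cayley_apply(A, B, pole, zero, x0, n_inner=3)

# The exact transform maps an eigenvalue lam to (lam - zero) / (lam - pole).
factor = (lam[0] - zero) / (lam[0] - pole)
rel_err = np.linalg.norm(w_inexact - w_exact) / np.linalg.norm(w_exact)
```

An eigenvalue close to the pole is mapped to a transformed eigenvalue of large modulus, which is why the choice of pole steers the method toward a particular eigenpair.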

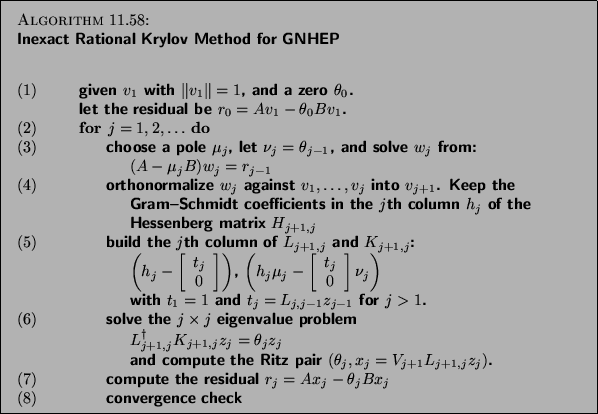

We now discuss the method in detail, using the algorithm of the rational Krylov method, designed for the inexact Cayley transforms, given below.

Let us analyze this algorithm step by step.

The solution of the linear system leads to the relations (11.2), where ![]() is a Ritz vector and ![]() is the associated Ritz value ![]() from the previous iteration, such that the right-hand side is the residual ![](). Since ![](), we can also write ![](), where ![]() is called the continuation vector. After the Gram-Schmidt orthogonalization, we have ![](), so we can rewrite (11.2) as

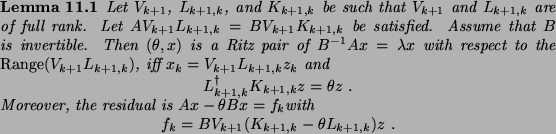
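
The step just described (residual of a Ritz pair as right-hand side, approximate solve, Gram-Schmidt orthogonalization) can be sketched as follows. This is only an illustration under stated assumptions: the helper `gram_schmidt_extend`, the Rayleigh-quotient Ritz value, the diagonal scaling used as a crude inexact solve, and the test matrices are all hypothetical choices, not the method of Algorithm 11.4.

```python
import numpy as np

def gram_schmidt_extend(V, w):
    """Orthogonalize w against the orthonormal columns of V.

    Uses classical Gram-Schmidt with one reorthogonalization pass and
    returns (V_new, h) such that w = V_new @ h.
    """
    h = V.T @ w
    u = w - V @ h
    c = V.T @ u          # second pass for numerical stability
    u = u - V @ c
    h = h + c
    beta = np.linalg.norm(u)
    V_new = np.column_stack([V, u / beta])
    return V_new, np.append(h, beta)

rng = np.random.default_rng(0)
n, j = 6, 2

# Hypothetical symmetric test problem with B = I.
E = 0.01 * rng.standard_normal((n, n))
A = np.diag(np.arange(1.0, n + 1)) + (E + E.T) / 2
B = np.eye(n)
V, _ = np.linalg.qr(rng.standard_normal((n, j)))  # orthonormal basis

# Continuation vector t selects the approximate eigenvector y = V t.
t = np.array([1.0, 0.0])
y = V @ t
theta = (y @ A @ y) / (y @ B @ y)   # assumed: Rayleigh quotient as Ritz value
r = A @ y - theta * (B @ y)         # residual, used as right-hand side

# Crude inexact solve of (A - pole*B) w = r by diagonal scaling (assumption).
pole = 0.5
w = r / np.diag(A - pole * B)

V_new, h = gram_schmidt_extend(V, w)
```

After the call, `h` holds the coefficients of `w` in the extended orthonormal basis, so `w` equals `V_new @ h` up to rounding.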

Before we continue, we must give some properties of the exact rational Krylov method. The following lemma explains how to compute Ritz values in the rational Krylov method.

As with the (Jacobi) Davidson method, the RKS method applies an inexact Cayley transform to a vector. The difference lies in the way the Ritz pairs are computed. In the (Jacobi) Davidson method, the Ritz pairs result from a Galerkin projection of ![]() on the subspace. In the RKS method, the Ritz pairs are computed from the recurrence relation using Lemma 11.1, assuming that ![]().
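
For comparison, the Galerkin projection used on the (Jacobi) Davidson side can be sketched in a few lines. This is a minimal illustration assuming the projected problem is the pencil `(V^T A V, V^T B V)` for an orthonormal basis `V`; the function name `galerkin_ritz_pairs` and the random test data are hypothetical. The RKS computation from the recurrence relation is not shown here.

```python
import numpy as np

def galerkin_ritz_pairs(A, B, V):
    """Ritz pairs of the pencil (A, B) from a Galerkin projection on span(V)."""
    Ap = V.T @ A @ V
    Bp = V.T @ B @ V
    # Small projected problem Ap z = theta Bp z, solved as a standard one.
    theta, Z = np.linalg.eig(np.linalg.solve(Bp, Ap))
    return theta, V @ Z   # Ritz values and Ritz vectors

rng = np.random.default_rng(1)
n, k = 8, 3
S = rng.standard_normal((n, n))
A = S + S.T                                   # symmetric test matrix
B = np.eye(n) + 0.1 * np.diag(np.arange(n))   # symmetric positive definite
V, _ = np.linalg.qr(rng.standard_normal((n, k)))

theta, Y = galerkin_ritz_pairs(A, B, V)

# Galerkin condition: each residual A y - theta B y is orthogonal to span(V).
galerkin_defect = max(
    np.linalg.norm(V.T @ (A @ Y[:, i] - theta[i] * (B @ Y[:, i])))
    for i in range(k)
)
```

The quantity `galerkin_defect` should be at rounding level, which is exactly the defining property of a Galerkin projection.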

With the inexact Cayley transform, the transformation can be a large perturbation of the exact Cayley transform, but can still be used to compute one eigenpair, when ![]() is well chosen. The same is true here. The inexact rational Krylov method delivers an ![]() (or ![]()) that is not random but small in the direction of the desired eigenpair, as long as the various parameters are properly set.

A Ritz pair ![]() computed by Algorithm 11.4 produces residual